February 9, 2021

Immersive technologies are proving great at reducing the amount of hands-on training required to operate lift equipment or fly a plane, but can someone become certified in a trade by training in VR alone?

JLG, for example, has a highly realistic VR simulator that even incorporates real controls, but the industry standard still requires hands-on training. For VR to supplant traditional training, it would have to be as close to the real thing as possible. Yet there are no standards for high-fidelity VR and no grading system for VR training sims. How do we get to that point?

No Hard Feelings

Currently in VR, you can tour a building before it's built, test-drive a car, and practice assembling a jet engine from scratch. You can't, however, run your hand along a velvet sofa, judge the comfort of a vehicle's interior, or feel a machine's parts lock correctly into place. Touch is incredibly important to how we interact with and understand the world. Of all our senses, it's probably the most overlooked and least understood.

How does touch work? Specialized receptors all over our skin (there are about 3,000 in each fingertip alone) sense temperature, pain, pressure, texture, and vibration. That information is encoded into electrical signals that travel to the brain, where they're interpreted as sensations. Neuroscientists aren't exactly sure how the brain decodes sensory information, but the effects can be physical, emotional, or cognitive. To put it simply, somatosensation, or the sense of touch, is a collection of sensations that enables humans to form social bonds, perform tasks that rely on fine motor skills, and more. Without it, you would struggle even to sit up or walk.

Studies show that virtual reality can change how someone thinks and behaves. Adding touch and other non-visual sensory inputs like smell and taste would make the illusion all the more powerful, but these senses are also the hardest to imitate in VR.

Status of Haptics

Traditional haptic technologies rely on vibrating motors. Smartphones use vibration as a form of output, and video game peripherals (rumble packs, force-feedback steering wheels, etc.) use it to enhance the gaming experience. But vibration is a far cry from how we touch and feel in the real world.

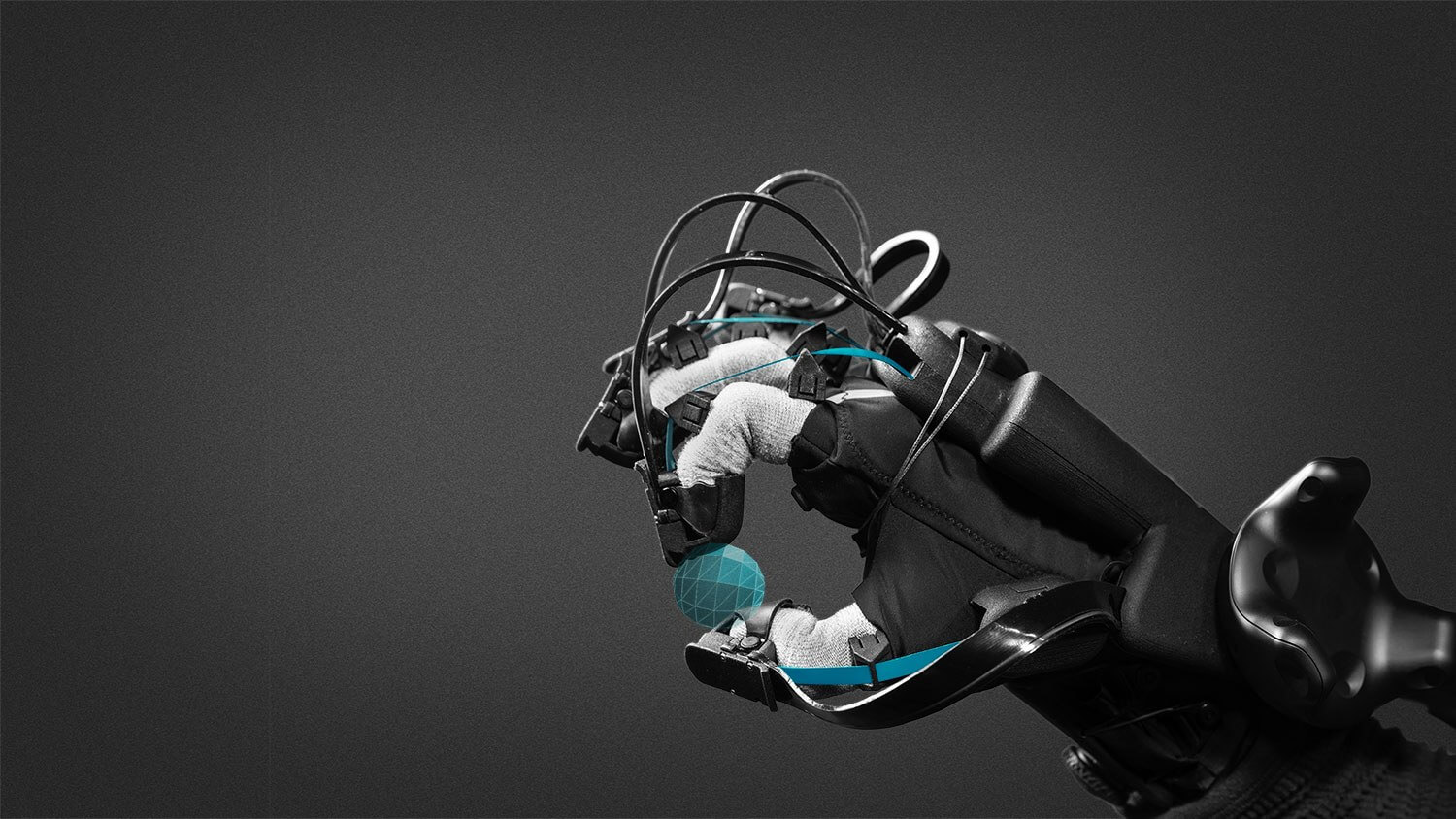

Engineers, researchers, and scientists around the globe are trying to replicate human touch to realize more advanced and natural computing interfaces for a range of applications, including training, robotic control, and online shopping. They're working on three main types of haptic devices: graspable (e.g., a joystick), wearable, and touchable (e.g., a skin patch). These devices use electric actuators, pneumatics, and/or hydraulics to create tactile and kinesthetic sensations like pressure, resistance, and force. For immersive experiences, tech companies are mainly developing haptic gloves and suits. One example is a glove that uses air to variably restrict and release your grip on a virtual object.

Though haptics is advancing, the sense of touch poses significant challenges in VR. Sight, hearing, taste, and smell all take place near or on our faces, but touch involves the entire body. We can fool our brains to some extent, but creating ultra-realistic training simulations capable of eclipsing all other methods of learning will take nothing short of simulating the laws of physics in the virtual world. Just imagine simulating the weight and heft of a real object, or recreating millions of different textures in VR.

Why Touch in VR

You can use a digital twin to see inside a machine, but what if you could feel a loose bolt or the wear of an engine as it runs in real time? Designers and engineers could get more accurate feedback to improve ergonomics and user experience. Industrial training could be done entirely in risk-free virtual spaces, and surgical students could acquire real surgical skills without going near a cadaver. Imagine shaking hands with a colleague on the other side of the world, or rescuers using haptic-feedback robots to pull people from a collapsed building. Articulated, near-human haptics could revolutionize the way we work, conduct international business, and respond to emergencies.

Haptics enables us to interact more naturally with virtual objects. By increasing the amount of information sent to the brain during a virtual experience, haptics deepens the sense of immersion. VR already has a much higher sensory impact than traditional training; adding a convincing sense of touch would make it more powerful still, enabling true kinesthetic learning.

The Players

That's not to say that today’s haptic devices aren’t capable or rapidly advancing. The technology is advanced enough for businesses to begin incorporating haptic gloves and other VR peripherals like controllers and treadmills into training, product design, sales, and other applications. Here are some of the companies working on wearable haptic devices:

HaptX

HaptX has focused on haptic gloves for VR training, simulation, and design since 2012. Its glove technology uses microfluidics, force feedback, and precise motion tracking to provide realistic touch and natural interaction in VR. Each glove contains 130 pneumatic actuators in silicone-based microfluidic skin panels, which expand or contract to push against the user's skin the same way a real object would. A lightweight exoskeleton applies up to four pounds of force to each finger, improving the perception of a virtual object's size, shape, and weight. Finally, magnetic motion tracking and a hand simulation system deliver sub-millimeter hand tracking with six degrees of freedom per finger. HaptX says Nissan and dozens of other companies have piloted its Gloves Development Kit to feel virtual product prototypes, build muscle memory in training sims, and even control robots.

BeBop Sensors

Last October, BeBop Sensors announced the wireless, one-size-fits-all Forte Data Glove for the Oculus Quest. The glove enables natural hand interactions and haptic feedback for a more immersive training experience. BeBop's website doesn't get very technical, but it says the company's sensors detect force, location, size, weight, bend, twist, and presence. The Forte Data Glove Enterprise Edition has a breathable open-palm design, long battery life, and fast sensor speeds. According to BeBop, unnamed Fortune 500 companies are using the glove in VR for training, medical trials, robotics and drone control, CAD design, and more.

bHaptics

bHaptics's TactSuit is a line of wireless haptic accessories, including a haptic face cushion for HMDs (Tactal), a $499 haptic vest for the torso (Tactot), and haptic sleeves for the arms, hands, and feet (Tactosy), all made from a machine-washable, antibacterial material with mesh lining. With more than 70 haptic feedback points, the suit lets you feel sensations from the virtual world all over your body. The bHaptics website mentions sensations like a snake winding around your body and the recoil of a gun, so the company clearly isn't targeting enterprise. Still, it describes the devices as suitable for "martial arts, training content, and sports games."

HoloSuit

The HoloSuit is a motion capture suit consisting of a jacket, a pair of gloves, and pants. It can be used to train for procedures in which motor skills are important, as well as for repetitive training that helps workers build muscle memory. The Pro version packs 36 embedded motion sensors, nine haptic motors, and six programmable buttons. Trainees can interact with and feel components in the virtual world, their movements can be recorded, and the captured data can show their progress over time.

Teslasuit

Teslasuit is a full-body suit designed to improve the user's movement, reflexes, and instincts. Combining haptic feedback, motion capture, temperature control, and biometrics, it is well suited to training for complex tasks and environments. The suit captures the wearer's actions to establish baselines, so companies can track improvement over time. Embedded ECG and galvanic skin response (GSR) sensors track vital signs and emotional stress, helping determine how well the user performs under pressure. These sensors require direct skin contact, which means users have to strip down to put on the suit.

Teslasuit's new glove for business customers combines haptics, motion capture, biometry, and force feedback to gather real-time data as users perform dangerous tasks, such as fuel loading and emergency evacuation, in VR.

Manus VR

Manus VR's wireless Prime Haptic gloves deliver haptic signals that vary with the type of material and the amount of force applied in the virtual world. Internal circuitry detects the positions of the finger joints, a Vive tracker on top of the glove reads the hand's position, and an inertial measurement unit works out the thumb's rotation. Compatible with the HTC Vive and Vive Pro, the gloves can serve as a fully articulated hand in virtual assembly or training.

Future-looking

Today's haptic gloves can imitate force, shape, and texture to an extent. AI, advanced materials, and technologies borrowed from other fields, such as ultrasound, will be critical to improving haptics enough for VR to supplant other forms of training. Current research and experiments are promising. If you're interested, check out Project H-Reality, Project Levitate, Heather Culbertson's work at the University of Southern California, NeoSensory, and Novasentis. Watch this space!